From real-time translation and AI-enhanced visuals to deeper Shortcuts integration and developer model access, the upgrade blends technical sophistication with privacy-first design.

Unveiled today, the update brings new generative features including Live Translation in Messages and Phone, a smarter Image Playground, and visual intelligence capable of understanding on-screen content. Developers can now access Apple’s on-device foundation model directly, offering privacy-friendly, offline capabilities with no API costs. The rollout continues Apple’s push to integrate intelligence into daily use cases without compromising user control.

Live Translation Reaches Messages, FaceTime, and Phone

One of the most user-facing enhancements is Live Translation. It brings real-time multilingual communication to Messages, FaceTime, and Phone—offering live captions, spoken translation, and typed message conversion. Crucially, all translation occurs on-device, aligning with Apple’s emphasis on privacy by design.

On FaceTime, users can follow along via translated captions while still hearing the speaker. On phone calls, the spoken translation plays aloud in real time, while Messages translates text in both directions during chats. This could significantly improve international travel, remote collaboration, and inclusive communication, particularly as support expands to more languages by year’s end.

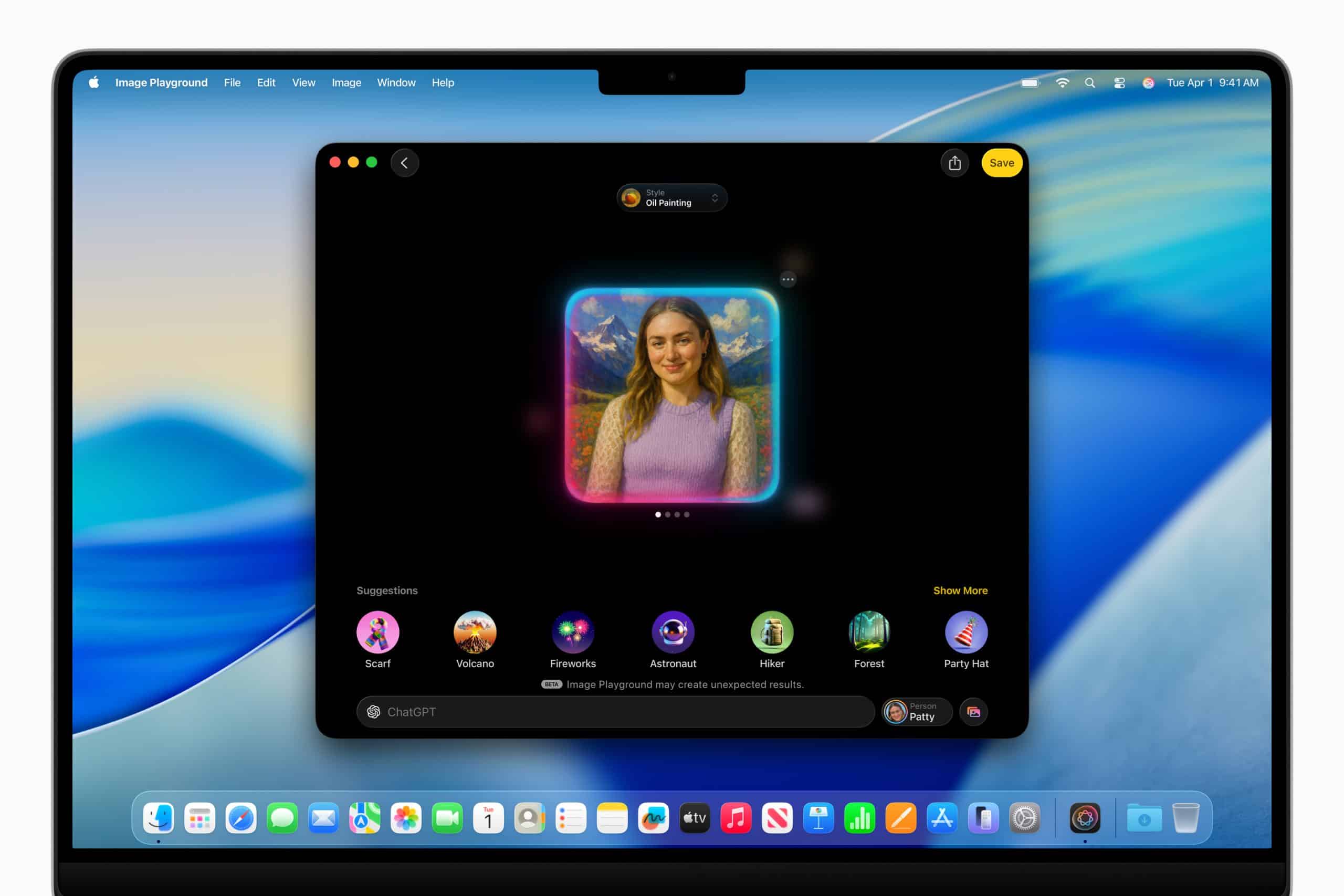

Image Playground & Genmoji Get a Creative Boost

Apple’s generative image tools also see upgrades. Genmoji creation now allows emoji mixing and attribute control, such as adjusting a hairstyle or facial expression. Meanwhile, Image Playground introduces “Any Style,” which lets users describe a custom image style and generate graphics via ChatGPT, but only after the user gives explicit permission. Most processing stays on-device unless the user chooses to involve ChatGPT.

Visual Intelligence Comes to the Entire Screen

Building on the existing visual intelligence capabilities, Apple Intelligence can now understand what’s on the user’s screen across apps. That means identifying objects, parsing event data for calendar entries, or enabling direct product lookups on platforms like Google or Etsy. A simple press of the screenshot buttons activates the feature, offering users options to save, share, or explore further.

Fitness Meets Intelligence on Apple Watch

The new Workout Buddy feature brings generative insights to workouts by analysing real-time metrics—heart rate, pace, activity rings—and delivering feedback through synthetic trainer voices. Powered by Apple Intelligence and a new text-to-speech model, it offers a personalised experience that adapts to the user’s performance and goals, all processed locally.

Developers Can Now Tap Apple’s On-Device AI

Perhaps the biggest shift is under the hood: developers now get access to Apple’s on-device foundation model via the Foundation Models framework. The framework has native Swift support, so an app can add intelligent features such as summarisation, search, or generation in as few as three lines of code, without relying on cloud APIs or external inference engines.

Because the model runs on-device, these features work even when users are offline. The framework also offers guided generation, tool calling, and more, lowering the barrier to entry for developers while maintaining Apple’s strict privacy standards.
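
To give a sense of what "three lines of code" might look like, here is a minimal Swift sketch of a summarisation call. The type and method names (`SystemLanguageModel`, `LanguageModelSession`, `respond(to:)`) reflect the Foundation Models framework as we understand it and should be checked against Apple's current documentation. The `summarise` helper, the `SummaryError` type, and the prompt wording are hypothetical and exist only for illustration.

```swift
import FoundationModels

// Hypothetical error type for this sketch; not part of Apple's framework.
enum SummaryError: Error {
    case modelUnavailable
}

// Hypothetical helper: asks the on-device model for a one-sentence summary.
@available(iOS 26.0, macOS 26.0, *)
func summarise(_ text: String) async throws -> String {
    // The on-device model can be unavailable (unsupported hardware,
    // Apple Intelligence switched off, model still downloading).
    guard case .available = SystemLanguageModel.default.availability else {
        throw SummaryError.modelUnavailable
    }

    // The "three lines": open a session, send a prompt, read the reply.
    let session = LanguageModelSession()
    let response = try await session.respond(to: "Summarise in one sentence: \(text)")
    return response.content
}
```

The availability check is the part most worth keeping in a real app: the call itself is short, but an app would still need a fallback for devices that cannot run the model.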

Shortcuts Become Truly Smart

Shortcuts now leverage Apple Intelligence directly, allowing users to automate tasks with AI-generated inputs. A user could, for example, build a shortcut to compare lecture notes with a class recording transcription and automatically append missing information. With on-device processing and optional ChatGPT integration, the system balances intelligence and control.

Additional Enhancements Across Apps

Apple Intelligence now enables:

- Intelligent suggestions in Mail and Messages

- Context-aware polls in Messages

- Order tracking summaries in Wallet

- Writing Tools with natural editing via Describe Your Change

- Smart replies and summarisation in emails and call transcriptions

- Contextual search and calendar creation from visuals

- A transparent approach to Private Cloud Compute, with verifiable code and no data storage

This update marks a pivotal moment: Apple is offering developers and users deep AI capabilities without ceding privacy. By combining generative features with offline operation, native integration, and user-first control, Apple positions itself apart from traditional AI players.

The capabilities go live in beta today for developers, with a public rollout planned for this autumn across iPhone 16 models, iPhone 15 Pro and Pro Max, and iPads and Macs with M1 or later.